'Reverse Inference' in deep neural networks

Googling the term Reverse Inference usually leads to neuropsychology articles. These articles describe methods that try to identify a person's mental state from observed brain activity. The methods mostly rely on MRI scans of the human brain, which are used, more or less successfully, to interpret the patient's ongoing cognitive process.

https://www.sciencedirect.com/science/article/pii/S1053811913000141

I have borrowed the fancy term for a much simpler, though similar, problem.

Neuroscientists ask: I know very little about my patient's brain, but how do I know what he/she feels or sees when the brain's MRI looks like this?

My simple question is: given a trained DNN, what does the net see on its input when I get this particular output?

There may be other approaches to this kind of DNN 'reverse engineering'. A very convenient method I used is based on a GAN (Generative Adversarial Network). While a GAN's primary purpose is to train a detector (discriminator) and a generator simultaneously, in our case the detector has already been fully trained and the training set is unknown.

For the following example we train a CNN on the standard MNIST handwritten digits dataset. For an example of a regular GAN on the same dataset, see: https://machinelearningmastery.com/how-to-develop-a-generative-adversarial-network-for-an-mnist-handwritten-digits-from-scratch-in-keras/

Google Colab offers a very convenient environment for our experiment, so the following code extracts are optimized for it. Let's start by training a CNN on the MNIST dataset to create the discriminator model:

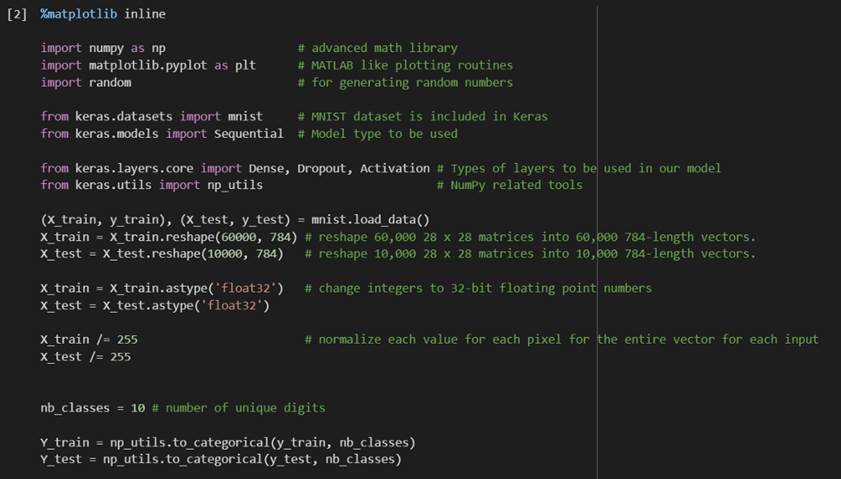

First, we prepare the environment and the training data:

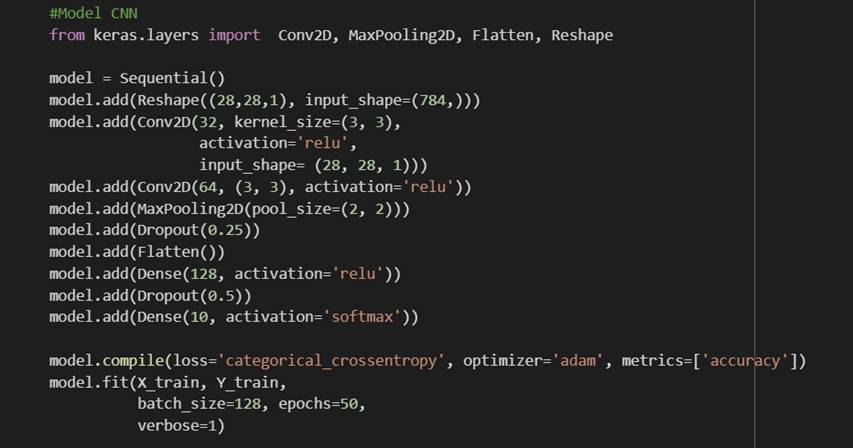

The model for the detector (discriminator) is defined as a CNN and trained on the MNIST dataset:

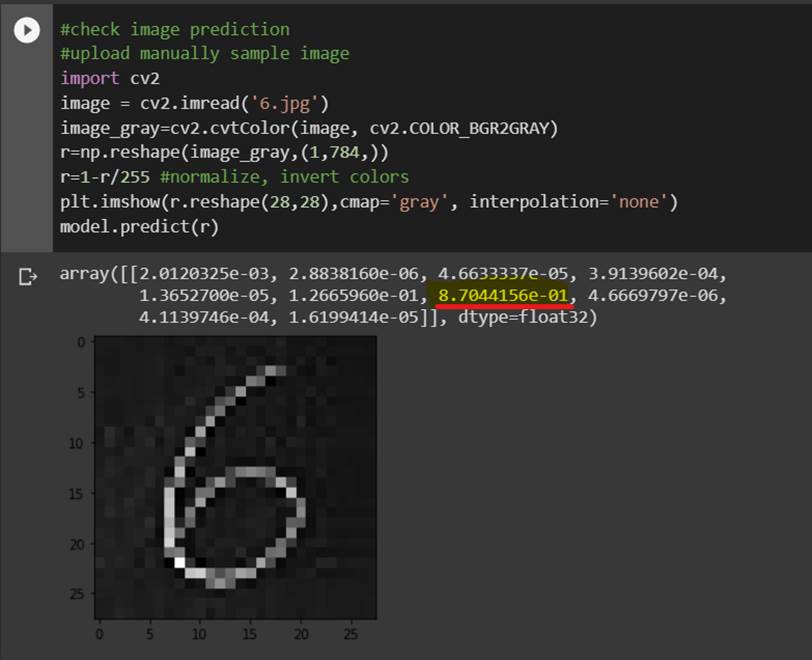

Let's check if the trained model recognizes a custom uploaded image:

The result is quite good: the detected class actually corresponds to the number 6.

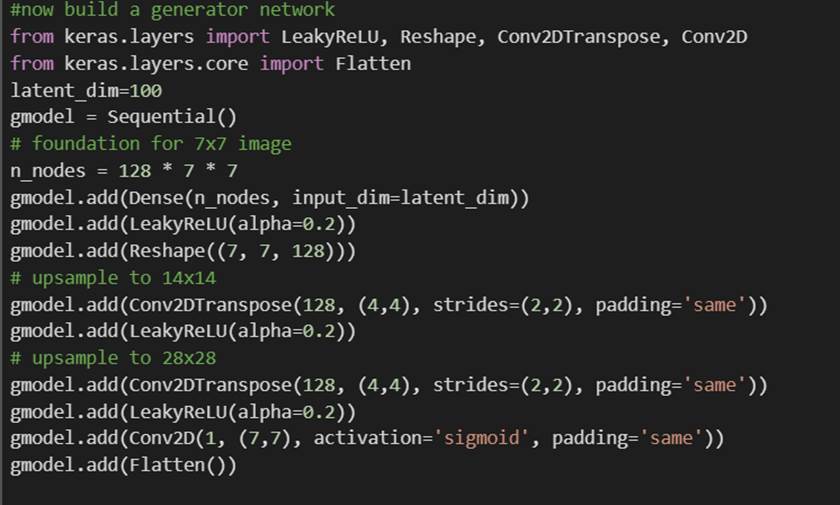
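Reading the classifier's answer comes down to picking the most probable class and its probability. The sketch below assumes the model ends in a softmax over the ten digit classes, which is the usual setup but is not confirmed by the text; the helper names `softmax` and `top_class` and the raw scores are made up for illustration.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_class(probs):
    """Return (class index, confidence) of the most probable class."""
    index = max(range(len(probs)), key=lambda i: probs[i])
    return index, probs[index]

# Hypothetical raw scores for the ten digit classes 0..9 (not from the notebook).
logits = [0.1, -1.2, 0.3, 0.0, -0.5, 0.2, 6.0, -0.7, 0.4, -0.1]
digit, confidence = top_class(softmax(logits))
print(digit, round(confidence, 3))
```

The confidence printed here is the same kind of number the article later reports as being close to 100% for the generated images.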

Now that we have a reasonably trained model, let's try to build a generator network for creating images.

For this example the network layout copies the generator part of the GAN model referenced above.

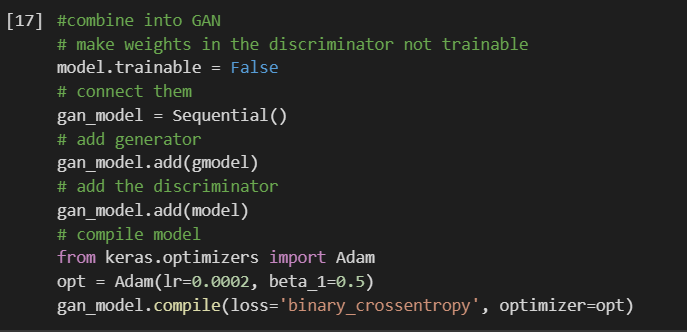

Now we combine the two models and start training the generator model (note that the discriminator model is set as non-trainable).

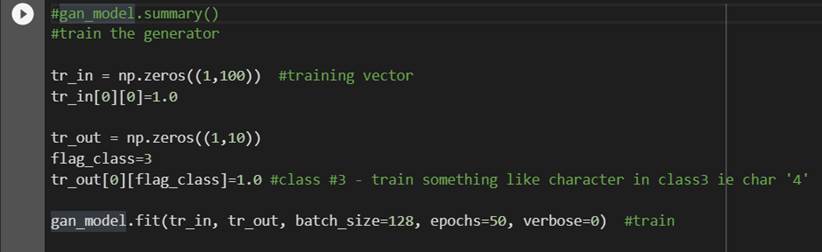
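The idea of training a generator against a frozen model can be shown without any framework. The following is a toy sketch in plain Python, not the notebook's Keras code: a random linear softmax classifier stands in for the trained CNN, the "image" has only 64 pixels, and every name and hyperparameter here (`classify`, `generate`, `train_generator`, the learning rate) is an assumption made for illustration. In Keras, the same freezing is normally achieved by setting the discriminator's `trainable` attribute to `False` before compiling the stacked model.

```python
import math
import random

NUM_PIXELS, NUM_CLASSES, LATENT_DIM = 64, 10, 10

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def classify(weights, biases, image):
    """Frozen stand-in classifier: one linear layer followed by softmax."""
    logits = [sum(w * p for w, p in zip(row, image)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)

def generate(gen_w, gen_b, latent):
    """Trainable generator: linear layer plus sigmoid, pixels in [0, 1]."""
    return [sigmoid(sum(w * z for w, z in zip(row, latent)) + b)
            for row, b in zip(gen_w, gen_b)]

def train_generator(weights, biases, latent, target, steps=500, lr=0.5, seed=0):
    """Gradient descent on cross-entropy, updating generator parameters only."""
    rng = random.Random(seed)
    gen_w = [[rng.uniform(-0.1, 0.1) for _ in range(LATENT_DIM)]
             for _ in range(NUM_PIXELS)]
    gen_b = [0.0] * NUM_PIXELS
    for _ in range(steps):
        image = generate(gen_w, gen_b, latent)
        probs = classify(weights, biases, image)
        # Gradient of cross-entropy w.r.t. the logits: probs - one_hot(target).
        d_logits = [p - (1.0 if k == target else 0.0)
                    for k, p in enumerate(probs)]
        for j in range(NUM_PIXELS):
            # Backpropagate through the frozen classifier to pixel j ...
            d_pixel = sum(d_logits[k] * weights[k][j] for k in range(NUM_CLASSES))
            # ... then through the sigmoid to the generator's pre-activation.
            d_pre = d_pixel * image[j] * (1.0 - image[j])
            # Only the generator is updated; the classifier is never touched.
            for i in range(LATENT_DIM):
                gen_w[j][i] -= lr * d_pre * latent[i]
            gen_b[j] -= lr * d_pre
    return gen_w, gen_b

# Frozen classifier with fixed pseudo-random weights (hypothetical stand-in).
frozen_rng = random.Random(42)
clf_w = [[frozen_rng.uniform(-1, 1) for _ in range(NUM_PIXELS)]
         for _ in range(NUM_CLASSES)]
clf_b = [frozen_rng.uniform(-0.1, 0.1) for _ in range(NUM_CLASSES)]
clf_w_before = [row[:] for row in clf_w]

latent = [1.0] + [0.0] * (LATENT_DIM - 1)  # fixed latent input
target = 3                                 # class the image should trigger
gen_w, gen_b = train_generator(clf_w, clf_b, latent, target)
image = generate(gen_w, gen_b, latent)
probs = classify(clf_w, clf_b, image)
predicted = max(range(NUM_CLASSES), key=lambda k: probs[k])
print(predicted, round(probs[predicted], 3))
```

The gradient flows through the classifier to reach the generator, but only `gen_w` and `gen_b` change, which is the whole point of marking the discriminator as non-trainable.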

Here we try to get a result for class 3 (in the MNIST model, the character '4').

Now we can get a generated image: just run inference on the latent space vector with the same value used for training the generator model, tr_in = [1,0,0,0,0,...]

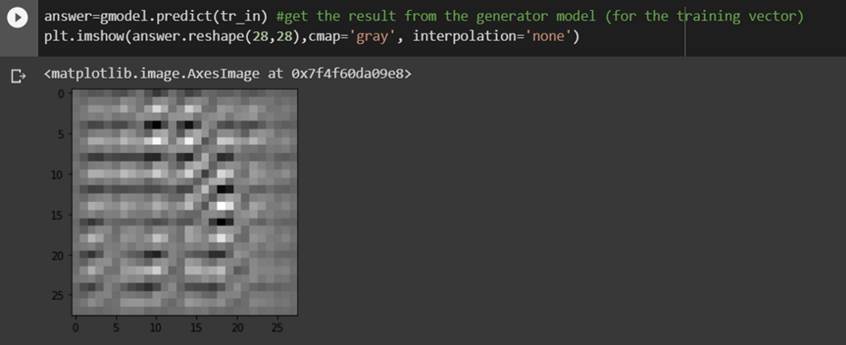

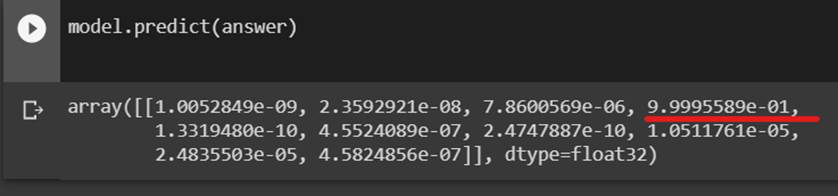

The generated image doesn't look much like a handwritten '4', so let's see how the MNIST-trained model recognizes it.

The detection looks pretty accurate, even though the generated result doesn't resemble the images in the dataset at all.

Due to random initialization, repeated training and prediction give different results, all of which successfully pass detection by the discriminator model.

The result above shows that training a generator by backpropagation through an existing trained model can easily create input data that fits the trained detection model. The images are not plausible, however: they generally don't come even close to the images in the training dataset. That is why the full GAN concept relies on the training dataset. With real examples available, the discriminator learns to reject generated images that would otherwise fit the model but differ significantly from the expected input.
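The difference can be written down as two objectives. The notation is introduced here for illustration and does not come from the notebook: $D$ is a real-versus-fake discriminator, $G$ a generator, $p_{\text{data}}$ the distribution of training images, $p_z$ the latent distribution, $C_t$ the frozen classifier's softmax probability for the target class $t$, and $z_0$ the fixed latent vector. The second formula assumes the generator is trained with categorical cross-entropy, which the text does not state explicitly.

The standard GAN objective is

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

whereas the experiment above only minimizes, over the generator parameters $\theta$,

$$
\min_{\theta} \; -\log C_t\big(G_\theta(z_0)\big)
$$

The second objective contains no term involving $p_{\text{data}}$, so nothing pulls the generated image toward the look of real digits; any input that drives $C_t$ close to 1 is an equally good solution.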

The technique can easily be misused in an adversarial attack against the model, since a seemingly random noise image would be detected as a specific class with a confidence close to 100%.

The full Colab notebook can be downloaded here: https://gist.github.com/gogela/974673c9d117962d10fe3a3409099d45